As AI becomes more common in education, there’s a growing concern about its impact on both students and the system as a whole, especially in the United States. While AI offers convenience and efficiency, it raises serious problems, such as weakened critical thinking skills and more cheating. The trend of using AI and buying research papers for sale not only affects academic integrity but also challenges the true purpose of education: to inspire deep thinking and personal growth. In this research, written by one of our expert writers, we examine how AI shapes the educational landscape and why its influence could be more harmful than helpful.

Why AI Is Bad for Education

Despite the benefits that artificial intelligence (AI) can have for the educational system, it also poses significant challenges related to the ethical use of those platforms. The launch of ChatGPT in November 2022 transformed the landscape of various industries, including the education sector. This disruptive tool democratized access to AI, leading a large part of society to begin using it for different purposes (Baek et al. 1). Currently, 90% of students are aware of the existence of such chatbots, and 89% report that they have used them for their school assignments. Adopting generative AI systems to compose essays and other types of schoolwork creates scholarly hurdles around plagiarism, academic misconduct, and learning. Indeed, 72% of college professors are concerned about how easy these tools make cheating (Westfall). Generative AI models in education have the potential to limit student learning and professional development as well as undermine academic integrity, leading to more cases of plagiarism and dishonesty.

How Students Are Adopting AI

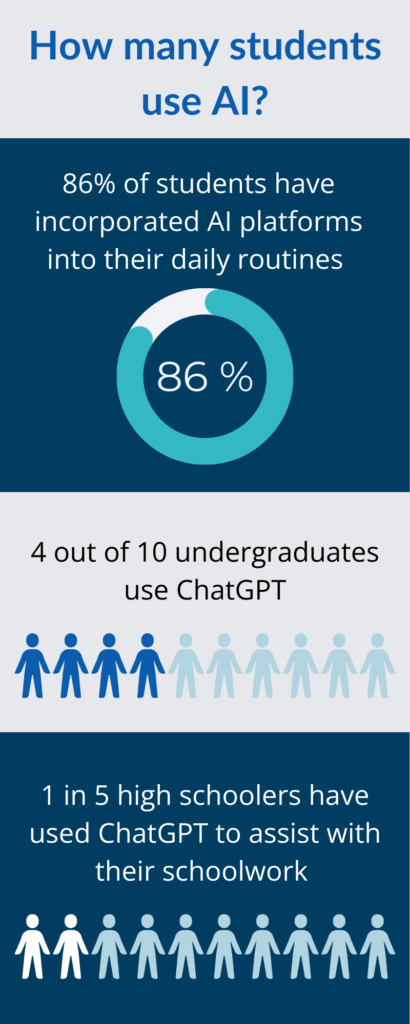

The use of AI essay checker tools among American students has seen remarkable growth over the past few years. Scholars at all educational levels use generative AI models to support their daily activities. A survey conducted by the Digital Education Council in 2024 revealed that 86% of learners have incorporated AI platforms into their daily routines. These results are consistent with those published by Intelligent, which found that 4 out of 10 undergraduates use ChatGPT. In addition, another study performed by the Pew Research Center discovered that one in five high schoolers has used ChatGPT to assist with schoolwork (Sidoty and Gottfried). This data suggests that AI adoption is higher among college attendees than among those at lower levels of the educational system. In short, students are actively integrating these AI systems into their academic processes.

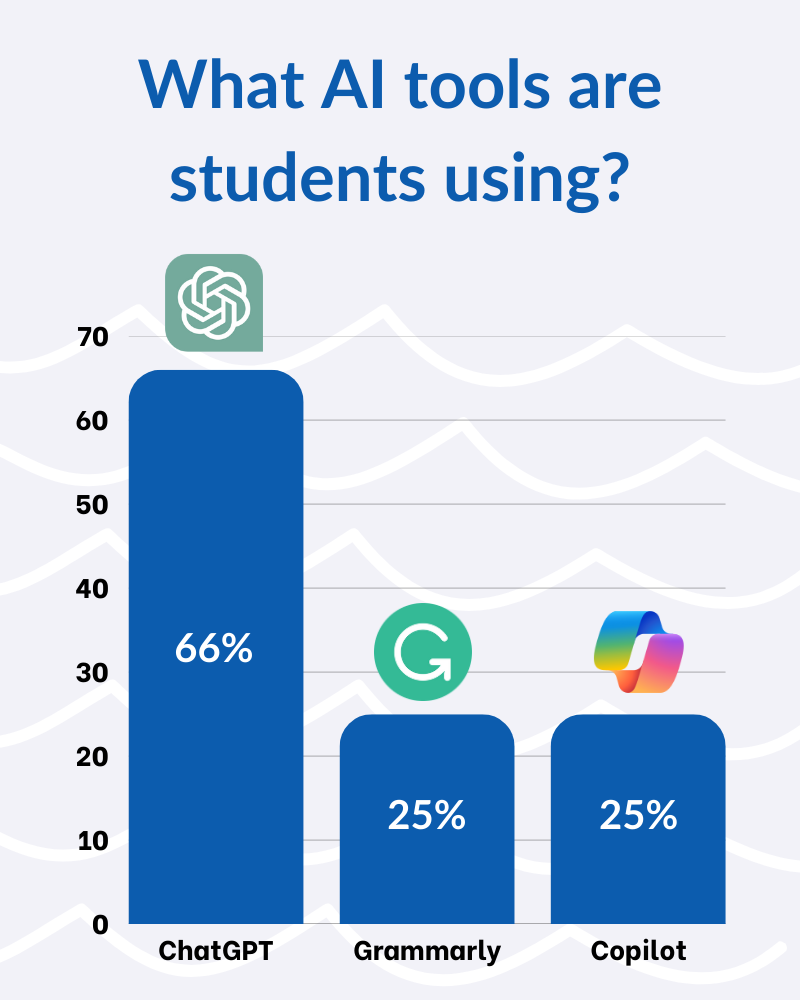

With the ample supply of AI models on the market, knowing which ones are most used by students is imperative. The Digital Education Council’s 2024 report unveiled that 66% of students use OpenAI’s ChatGPT as their primary tool. This platform is followed by Grammarly and Microsoft Copilot with 25% each. Other AI applications identified were Gemini, Perplexity, Claude AI, Blackbox, DeepL, and Canvas image generator. This research also showed that, on average, each student uses more than two AI tools. So, the scholars’ preferred solution is ChatGPT, but they are also open to incorporating new alternatives into their processes.

How Students Are Using ChatGPT in Education

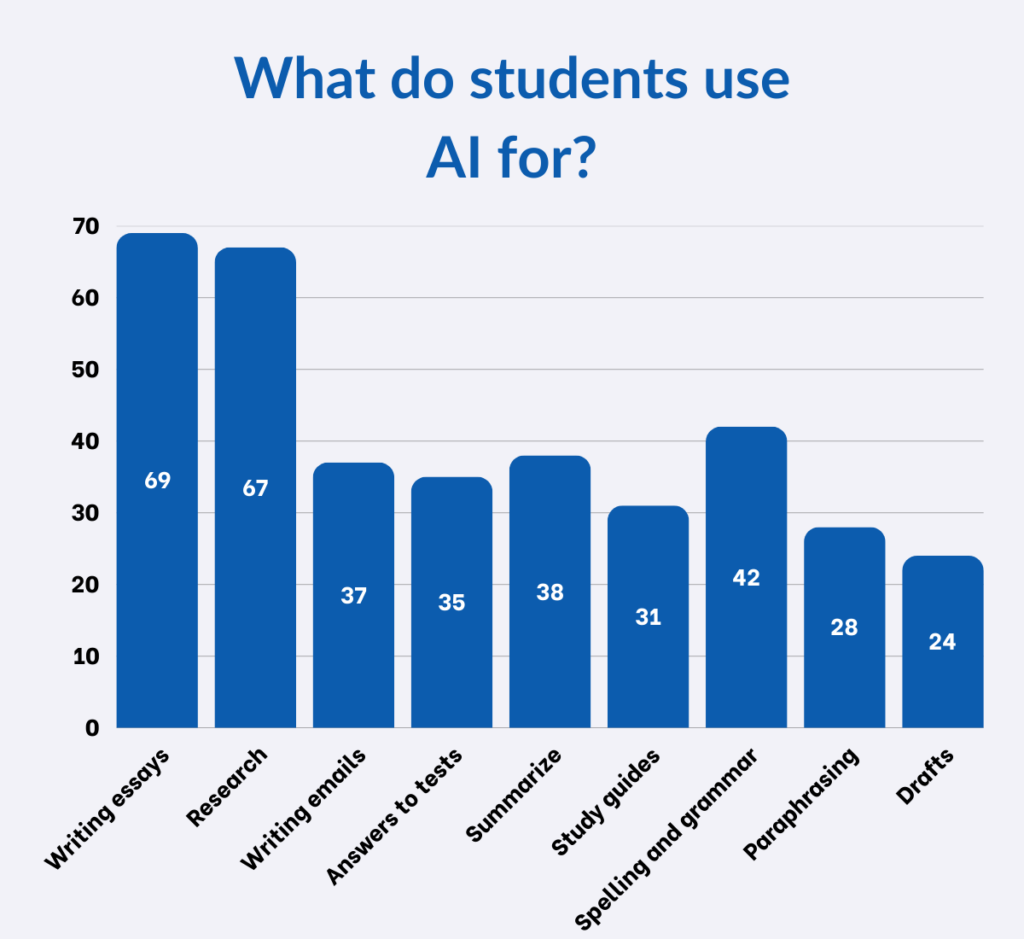

A high percentage of students use ChatGPT for their assignments. Intelligent reports that 96% of scholars who use these tools said that in the last year, they have used them rather than a college paper writing service to solve a school-related assignment. The most popular uses are writing essays (69%), conducting research (67%), writing emails (37%), and getting answers to tests and quizzes (35%). Another survey conducted by Quizlet revealed that individuals employed AI platforms to perform investigations (46%), summarize or synthesize information (38%), and generate study guides (31%). Additionally, the Digital Education Council in 2024 reported that other common uses are checking grammar and spelling (42%), paraphrasing documents (28%), and preparing drafts of papers (24%). So, there is no doubt that generative AI is transforming the way learners acquire knowledge and complete their academic work.

Writing Assignments

Seven out of 10 students say AI is a crucial tool for writing assignments. Of the respondents, 17% said they use it all the time, while 24% mentioned they use it most of the time. According to the Intelligent report, individuals use ChatGPT to generate ideas (75%), rewrite texts (64%), and improve grammar and spelling (54%). Additionally, 22% of scholars reported using generative AI models to obtain their papers’ outlines and small summaries for each section. Many use AI systems to support them when generating the research topic and writing the methods section (Smerdon). This data shows that generative AI models have become indispensable resources for academic writing, mainly because they facilitate the development process and improve the cohesion of the text. However, this could lead to a high dependence on technological tools. Therefore, it is necessary to raise awareness among the population about the correct use of these platforms.

Research

A large number of scholars use these tools to research new topics. Sidoty and Gottfried found that high school students explore new themes they are discussing in class with generative AI models. 7 out of 10 teenagers consider this use to be acceptable. In agreement, a study conducted by Smerdon showed that one-third of undergraduates had used these tools to prepare their papers and for research purposes. Huff indicates that in the early stages of research, AI serves to handle routine tasks and address time-consuming steps needed for investigation. Nevertheless, he suggests that students must always examine AI results and keep control of their assignments due to the high potential for bias and hallucinations in AI platforms. So, although ChatGPT and other AI applications can assist during the investigation, evaluating the outcome critically by comparing it with official and credible data sources is imperative to avoid spreading misinformation.

Exam Preparation

AI tools allow students to develop study guides and practice for tests. These models serve as virtual mentors that provide practice questions and learning activities to scholars. After answering the questions, individuals receive feedback and recommendations on materials they need to reinforce to achieve better results (Nur 136). Additionally, AI can synthesize or summarize instructional materials, saving time and improving study efficiency. Ray indicates that generative AI models facilitate learning by paraphrasing or restructuring information so that it is easier to understand. By taking advantage of this functionality, undergraduates can more easily digest the complex concepts on their exams. However, students should use AI to complement their traditional study methods, not to replace them, because relying on it as their only educational resource could prevent the development of analytical skills.

Personalized Tutoring

Students use AI models to develop personalized study plans. Baidoo-Anu and Ansah (55) report that these tutoring programs help create learning paths tailored to each person’s needs and intellectual level. The generative model first analyzes the student’s performance data. The AI then collects data on the educational activities that the users have performed and provides lessons customized to their needs and preferences. Personalized tutoring allows each student to progress at a pace that matches their ability to master the material. It also helps them to learn in line with their desires and abilities (Nur 140). Thus, AI-based intelligent tutoring systems offer multiple benefits, such as adapting to the learning pace, constant availability, helping to recognize areas for improvement more quickly, and providing real-time feedback for ongoing enhancement. That being said, it is necessary to understand that these systems will never be able to completely replace the empathy of a human tutor.

Interactive Learning

Large language models (LLMs) are used to create interactive learning scenarios. ChatGPT allows students to interact conversationally with a virtual tutor that provides real-time feedback (Baidoo-Anu and Ansah 56). Another advantage that students highlight is that it helps them solve problem-based activities by explaining, step by step, how they could deal with these situations in the real world. In this sense, generative AI models help them create gamified or video game-style learning scenarios instead of sitting down to read or watch educational videos. Additionally, these models recommend content, help prepare lesson plans, answer students’ questions, and track progress (Baek et al.). Thus, AI can potentially make the learning process more effective. However, the individual should validate these scenarios to ensure the information is correct.

Translations

Tools like ChatGPT help translate educational materials into different languages. Baidoo-Anu and Ansah (56) highlight that this functionality allows students to access more knowledge because it helps break down the language barrier. Furthermore, Li et al. assert that it is an ideal tool for learning other languages because it offers real-time translations and grammar corrections, helping undergraduates improve their language skills through assessment and feedback. However, scholars need to pay attention to cultural nuances, since terms sometimes vary between countries and even slight variations can lead to misunderstandings. So, as with the rest of the functionalities, it is necessary to apply analysis and critical thinking to the responses offered by generative AI models.

Drawbacks of AI in Education

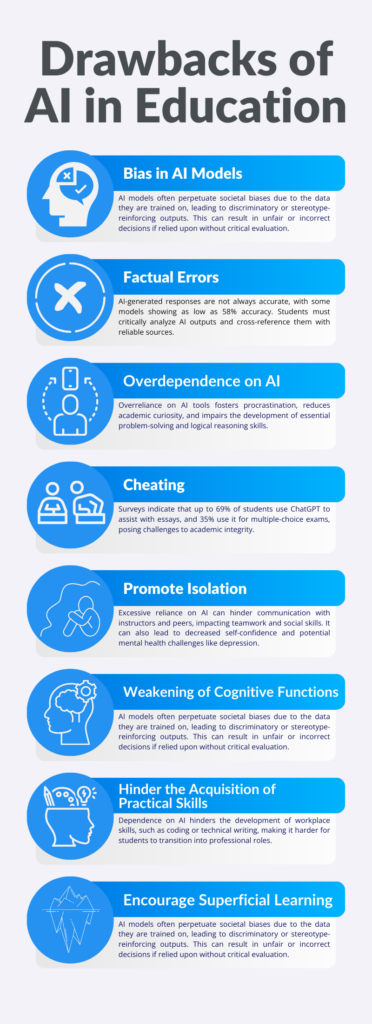

Integrating AI models into education has generated enthusiasm among some academics but has also caused great concern due to the potential adverse effects it could have. The main problems related to these models are highlighted below:

Bias in AI Models

Generative models are biased by the data they are trained on. ChatGPT and other tools have been fed data from the Internet, which means there is a high risk of algorithmic bias (Baidoo-Anu and Ansah 56). Relying on these biased results can perpetuate existing societal biases, delivering discriminatory or stereotype-reinforcing responses (Baek et al. 5). In turn, these responses could amplify those biases and lead to unfair, wrong, or discriminatory decisions.

Factual Errors

Accuracy is indispensable in all areas of knowledge. Baek et al. (5) report that the information delivered by ChatGPT is not always correct, which could affect the quality of the work of those who unquestioningly trust the tool. The responses of these models may contain errors, deliver outdated information, or spread misinformation. A study conducted by Lin et al. evaluated how accurately various AI models answered questions. The results were surprising because the most advanced model was accurate in only 58% of its responses, compared to 94% for the answers written by humans. It is also critical to note that this disparity could negatively impact the ability of students to discern between valid and invalid sources of information. So, it is essential that students always critically analyze the data delivered by these models as well as conduct independent research using recognized and up-to-date sources to support their claims.

Cheating

Students may use AI tools to cheat on their essays and exams. A survey revealed that one in three college students has used AI to cheat on their assignments (Smerdon). King (1) claims that individuals could generate answers to their assignments through prompts and questions posed to chatbots, such as ChatGPT or Gemini. They would then copy and paste these answers into their essays to submit them as their own. According to Intelligent, 69% of students report using ChatGPT as an assistant to write their essays. Meanwhile, 29% claim to have used this chatbot to write 100% of their essays. Also, 50% admit to using this tool to write at least one complete section of their essay (Intelligent). Regarding exams, a survey conducted by Intelligent reported that 35% of students use ChatGPT to get the answers to single-choice questions. Another study found that 48% of respondents used generative AI models to answer at-home tests or quizzes (Westfall). Hence, this data reflects a growing trend in the use of AI for dishonest academic activities, which poses significant challenges to maintaining academic integrity.

Promoting Isolation

If students start interacting more with AI models than with other humans, their social skills will be affected. A growing concern is that students will turn to ChatGPT to find answers to their questions or to solve their problems. This behavior could lead to them ceasing communication with instructors or classmates, negatively impacting skills such as teamwork and the ability to discuss ideas (Rajabi et al.). It has also been documented that some mental health disorders could arise. Rajabi et al. stated that using generative AI models could cause students to have less confidence in their abilities to solve tasks. In the medium term, this would undermine their self-confidence, causing self-esteem problems that would eventually turn into depression or another mood disorder. Thus, these findings reveal the importance of raising awareness among the population about adequately using these tools to conserve mental and social well-being.

Weakening of Cognitive Functions

Using ChatGPT can weaken memory. Abbas et al. report that excessive use of these tools can negatively impact the ability to retain information. This occurs because such use reduces the active mental engagement needed to consolidate learning. Additionally, these tools can cause students to disengage from active learning and begin to play a more passive role in their activities, making it difficult for them to remember information. This passive approach to education is related to students limiting themselves to copying and pasting AI-generated responses without analyzing or reflecting on the content (Rajabi et al.). Therefore, it is essential to make students aware that active learning is necessary for their proper development.

AI tools can kill critical thinking by replacing active thought processes with automatically generated responses. These models minimize the ability to critically evaluate information by encouraging students to accept ChatGPT responses as absolute without questioning them (Rajabi et al.). Students must constantly reflect on and make inferences about the material to develop this skill.

The use of generative AI can also diminish creativity. Some research has found that the diversity and originality of AI models’ responses are limited to a few patterns (Baidoo-Anu and Ansah 57). Overrelying on ChatGPT for academic purposes could result in diminished creative ability. Indeed, students may lose their voice and authenticity, taking on the AI patterns as their own (Baek et al. 5). They may also lose the ability to generate ideas or proposals on their own, believing that the models provide more creative or interesting answers (Rajabi et al.). Thus, creativity should be a skill that is constantly fostered.

Overdependence on AI

Reliance on AI tools leads to maladaptive behavioral patterns such as procrastination and avoiding mental effort. Abbas et al. found that students who use ChatGPT to complete their assignments tend to leave them until the last minute because they fully trust the tool. Furthermore, they tend to put in less effort when completing them. Rajabi et al. note that individuals avoid the mental effort required to develop critical skills such as logical reasoning and problem-solving by relying on generative AI models to solve assignments. Lastly, another ramification of this situation is that students lose the ability to study independently, and their academic curiosity and capacity for continuous learning are reduced, thus negatively impacting their professional future.

Hindering the Acquisition of Practical Skills

ChatGPT can hinder the acquisition of essential practical skills for the workplace. Rajabi et al. argue that dependence on these technologies could impede the development of critical skills such as writing code, solving complex mathematical problems, or writing technical reports independently. The most profound impact would be that students who depend on these technologies would have a harder time transitioning and adapting to the workplace.

Encouraging Superficial Learning

Generative AI models foster surface-level learning by providing quick and seemingly correct answers. However, this practice carries the risk that students may experience difficulties understanding critical concepts in their professions. This is because they avoid deep exploration of the topics and conceptual understanding (Rajabi et al.). Thus, this could limit students’ ability to integrate and apply knowledge effectively.

The Ethical Implications of AI in Education

The use of AI could undermine academic integrity. Abbas et al. indicate that using ChatGPT in education encourages students to engage in dishonest academic practices such as plagiarism. Prothero reports that 63% of teachers said their students got in trouble for misusing generative AI to complete their schoolwork during the 2023-2024 school year. This percentage represented an increase of 48% from the previous school year. Another analysis by Copyleaks showed that in 2024, AI-generated student content increased by 76%. Thus, this data reveals a growing trend of indiscriminate use of AI among students that must be addressed to avoid a negative impact on future academics.

Although marketed as objective and neutral tools, chatbots can influence how those using them think and act. Akgun and Greenhow point out that algorithms are created from positions of power and reflect the values of their creators. In this sense, the data from these algorithms can represent historical and systemic societal biases, prolonging disparities and stereotypes in how people act. So, awareness of the problem of stereotypes is essential to prevent bias.

There are also serious concerns about how AI will impact student autonomy. Akgun and Greenhow indicate that these tools would jeopardize individuals’ ability to make independent judgments, making their academic success dependent on predictive systems. Hirabayashi et al. found that 25% of students use AI to replace traditional academic activities such as making summaries or completing required class readings. This behavior shows how these tools reduce students’ active participation in learning. Therefore, making students aware of how the learning process works and the relevance of completing these tasks independently for their professional future is imperative.

Why AI Is Bad for Education

ChatGPT is increasing the number of incidents involving AI-generated content at all levels of education. King (1) indicates that failing grades, expulsions, and damage to professional reputation are on the rise because these models make it easy for students to copy and paste answers that seem logical. Furthermore, this causes students to lose the ability to think critically and solve problems independently. Thus, ChatGPT in education is making people more dependent on technology.

Many students are accused of using AI in their assignments when they have not done so, while others who do use it go undetected. Rajabi et al. indicate that AI detection tools are not as accurate as they should be: students who have submitted original projects are penalized, while in other cases AI-generated content slips through. Consequently, this situation puts the educational system in a state of crisis because it demands new forms of evaluation that allow students’ skills to be adequately assessed.

Conclusion

Generative AI models are limiting students’ potential to learn and develop professionally. Research has shown many reasons why AI is bad for education. First, students are becoming increasingly dependent on AI, which they use in most academic activities. This dependence weakens critical cognitive abilities such as creativity, critical thinking, and memory. In addition, having models like ChatGPT readily available causes people to procrastinate, avoid mental effort, and engage in dishonest academic practices more frequently. Together, these situations result in students not being fully prepared for the world of work or developing to their full potential. Therefore, students need to keep their use of these applications to a minimum and use them responsibly.

Works Cited

Abbas, Muhammad, et al. “Is It Harmful or Helpful? Examining the Causes and Consequences of Generative AI Usage Among University Students.” International Journal of Educational Technology in Higher Education, vol. 21, no. 1, Feb. 2024, https://doi.org/10.1186/s41239-024-00444-7.

Akgun, Selin, and Christine Greenhow. “Artificial Intelligence in Education: Addressing Ethical Challenges in K-12 Settings.” AI And Ethics, vol. 2, no. 3, Sept. 2021, pp. 431–40. https://doi.org/10.1007/s43681-021-00096-7.

Baek, Clare, et al. “‘ChatGPT Seems Too Good to Be True’: College Students’ Use and Perceptions of Generative AI.” Computers and Education Artificial Intelligence, Sept. 2024, p. 100294. https://doi.org/10.1016/j.caeai.2024.100294.

Baidoo-Anu, David, and Leticia Owusu Ansah. “Education in the Era of Generative Artificial Intelligence (AI): Understanding the Potential Benefits of ChatGPT in Promoting Teaching and Learning.” SSRN Electronic Journal, Jan. 2023, https://doi.org/10.2139/ssrn.4337484.

Copyleaks. “One Year Later: Copyleaks’ Data Finds 76% Spike in AI-generated Content Among Students – Copyleaks.” Copyleaks, copyleaks.com/about-us/press-releases/one-year-later-copyleaks-data-finds-76-spike-in-ai-generated-content-among-students.

Digital Education Council. “How Students Use AI: The Evolving Relationship Between AI and Higher Education.” Digital Education Council, 30 Aug. 2024, www.digitaleducationcouncil.com/post/how-students-use-ai-the-evolving-relationship-between-ai-and-higher-education. Accessed 12 Jan. 2025.

Hirabayashi, Shikoh, et al. “Harvard Undergraduate Survey on Generative AI.” arXiv (Cornell University), June 2024, https://doi.org/10.48550/arxiv.2406.00833.

Huff, Charlotte. “The Promise and Perils of Using AI for Research and Writing.” https://www.apa.org, 1 Oct. 2024, www.apa.org/topics/artificial-intelligence-machine-learning/ai-research-writing.

Intelligent. “4 In 10 College Students Are Using ChatGPT on Assignments – Intelligent.” Intelligent, 11 Mar. 2024, www.intelligent.com/4-in-10-college-students-are-using-chatgpt-on-assignments. Accessed 12 Jan. 2025.

King, Michael R. “A Conversation on Artificial Intelligence, Chatbots, and Plagiarism in Higher Education.” Cellular and Molecular Bioengineering, vol. 16, no. 1, Jan. 2023, pp. 1–2. https://doi.org/10.1007/s12195-022-00754-8.

Li, Jiakun, et al. “Exploring the Potential of Artificial Intelligence to Enhance the Writing of English Academic Papers by Non-native English-speaking Medical Students – the Educational Application of ChatGPT.” BMC Medical Education, vol. 24, no. 1, July 2024, https://doi.org/10.1186/s12909-024-05738-y.

Lin, Stephanie, et al. “TruthfulQA: Measuring How Models Mimic Human Falsehoods.” arXiv (Cornell University), Sept. 2021, https://doi.org/10.48550/arxiv.2109.07958.

Nur, Tira. “Artificial Intelligence (AI) in Education: Using AI Tools for Teaching and Learning Process.” researchgate.net, www.researchgate.net/publication/357447234_Artificial_Intelligence_AI_In_Education_Using_AI_Tools_for_Teaching_and_Learning_Process.

Prothero, Arianna. “New Data Reveal How Many Students Are Using AI to Cheat.” Education Week, 24 Oct. 2024, www.edweek.org/technology/new-data-reveal-how-many-students-are-using-ai-to-cheat/2024/04.

Quizlet. “Quizlet’s State of AI in Education Survey Reveals Higher Education Is Leading AI Adoption.” PR Newswire, 15 July 2024, www.prnewswire.com/news-releases/quizlets-state-of-ai-in-education-survey-reveals-higher-education-is-leading-ai-adoption-302195348.html.

Rajabi, Parsa, et al. “Unleashing ChatGPT’s Impact in Higher Education: Student and Faculty Perspectives.” Computers in Human Behavior Artificial Humans, vol. 2, no. 2, Aug. 2024, p. 100090. https://doi.org/10.1016/j.chbah.2024.100090.

Ray, Sussana. “Smart Ways Students Are Using AI.” Microsoft, 16 Oct. 2024, news.microsoft.com/source/features/work-life/smart-ways-students-are-using-ai. Accessed 12 Jan. 2025.

Sidoty, Olivia, and Jeffrey Gottfried. “About 1 in 5 U.S. Teens Who’ve Heard of ChatGPT Have Used It for Schoolwork.” Pew Research Center, 14 Apr. 2024, www.pewresearch.org/short-reads/2023/11/16/about-1-in-5-us-teens-whove-heard-of-chatgpt-have-used-it-for-schoolwork.

Smerdon, David. “AI in Essay-based Assessment: Student Adoption, Usage, and Performance.” Computers and Education Artificial Intelligence, vol. 7, Aug. 2024, p. 100288. https://www.sciencedirect.com/science/article/pii/S2666920X24000912.

Westfall, Chris. “Educators Battle Plagiarism as 89% of Students Admit to Using OpenAI’s ChatGPT for Homework.” Forbes, 28 Jan. 2023, www.forbes.com/sites/chriswestfall/2023/01/28/educators-battle-plagiarism-as-89-of-students-admit-to-using-open-ais-chatgpt-for-homework.